Skills used/developed

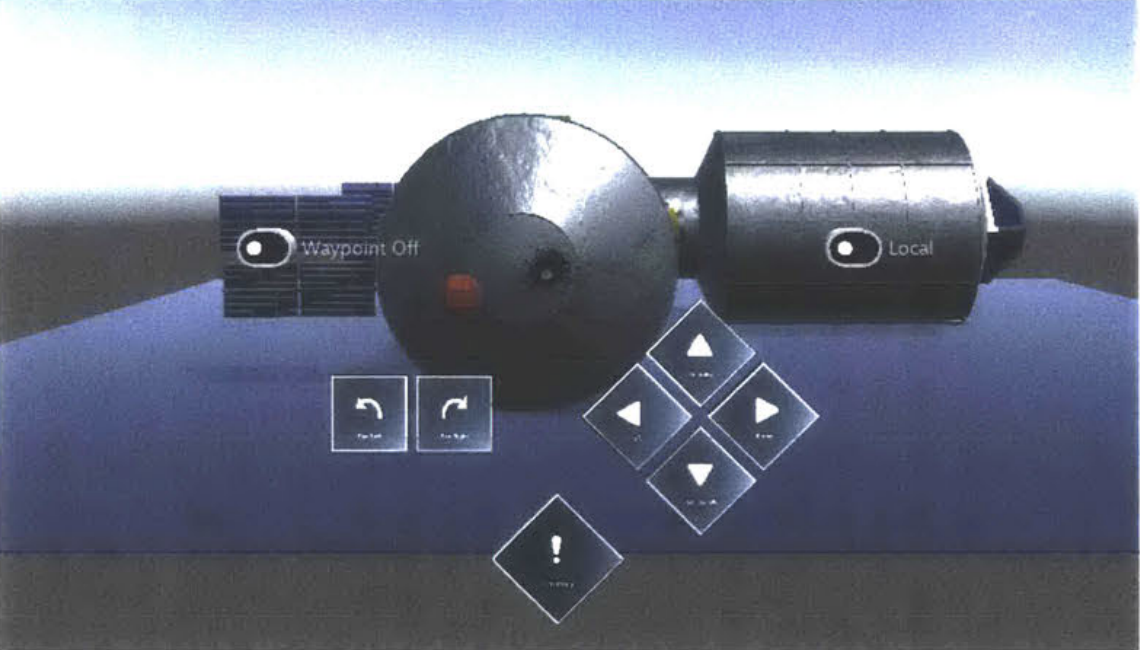

- Unity/C#

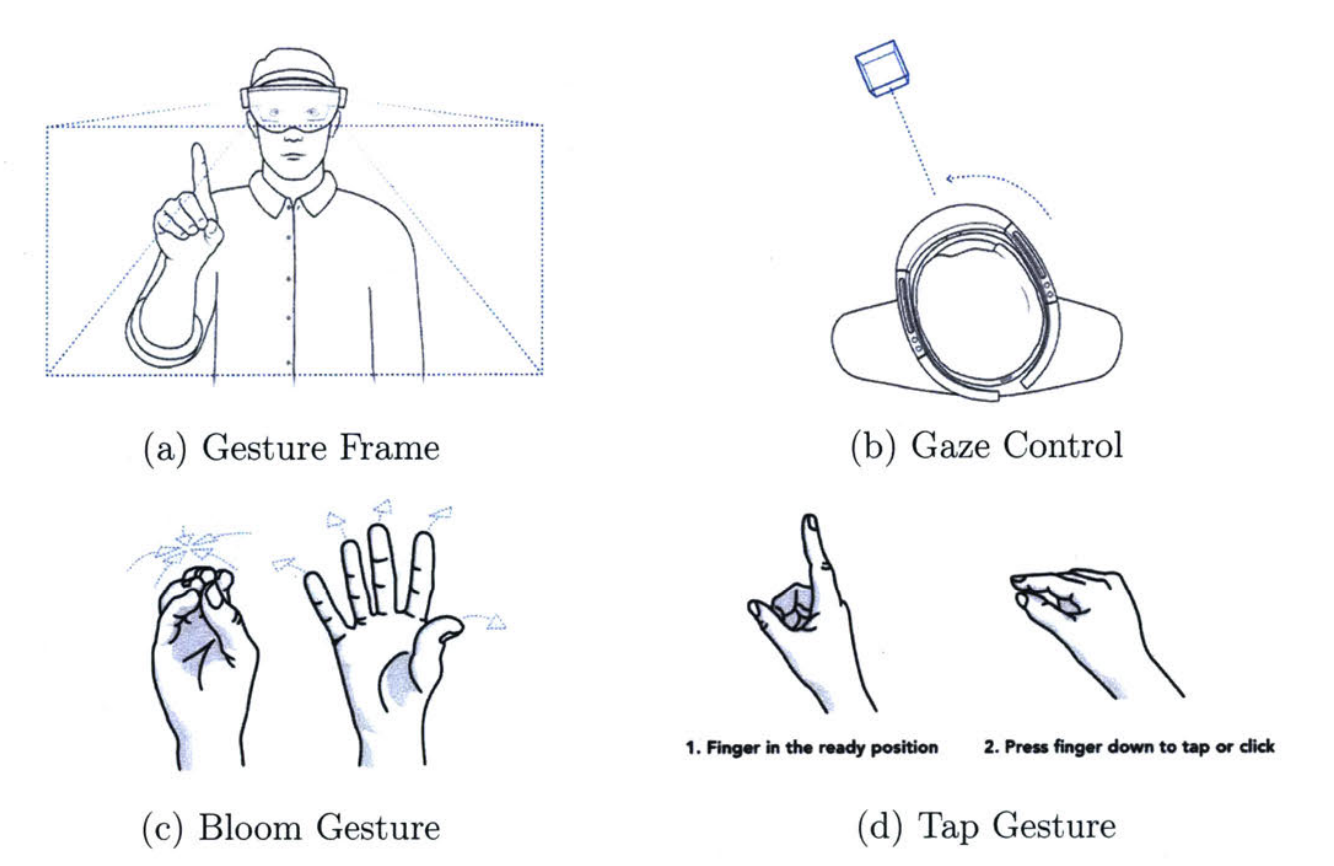

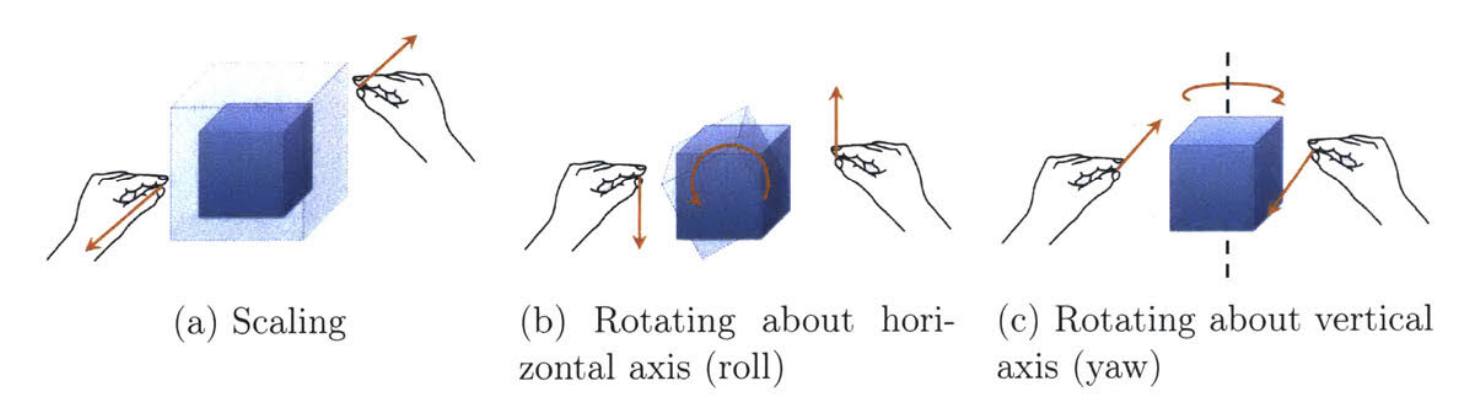

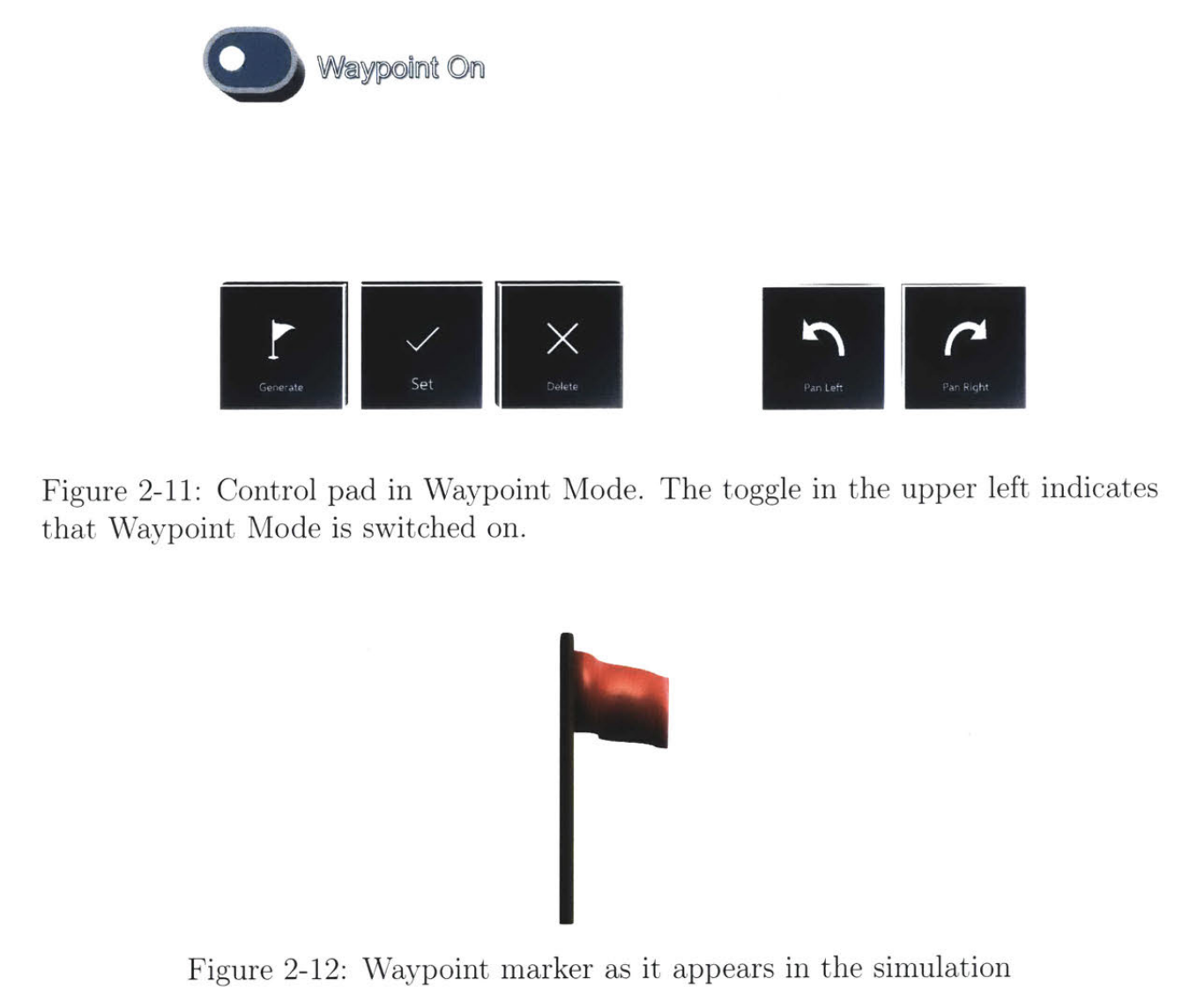

- Augmented Reality

- Microsoft HoloLens

- Experiment Design

- Human Factors Engineering

- Human-centered Studies

- Training Program Design